Using Google Cloud Platform (GCP) in a multi-cloud transformation demonstrates the sophistication and power of its Big Data Platform (Dataproc and more) to optimize cost and stability, resulting in a 205% return on cloud costs and a 168% return on run time.

The Power of GCP in a Multi-Cloud World

Introduction

When deciding where your organization should host its applications and store its data, there are several factors to consider. This decision has become more nuanced: a 2019 Gartner survey[1] found that 81% of organizations are using multiple cloud providers, with the provider of choice depending on the specific use case.

In 2020, Kin + Carta went on a journey with Google and a large investment research firm that offers digital product solutions to determine the core benefits of a multi-cloud environment, whilst identifying the specific scenarios where GCP offered a significant value proposition over its competitors.

Background

This global financial services research firm provides a range of research, risk and investment management service offerings.

Kin + Carta and Google were engaged by this firm’s quantitative risk team, which provides risk portfolio analysis to help the firm’s customers improve their investment decisions.

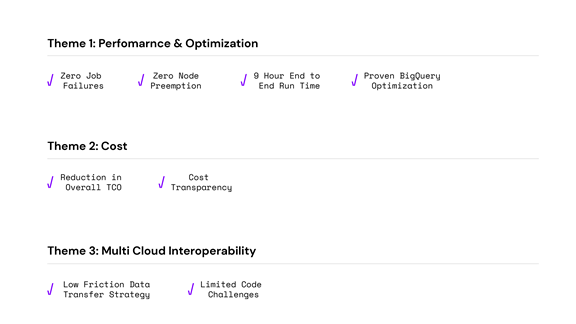

The quantitative risk team faced significant challenges with its existing single-cloud platform and wanted to align on an architecture that addressed the following core problem spaces:

- Performance, Stability and Optimization

- Cloud Costs

- Multi-Cloud Interoperability

To ensure a successful outcome, we identified and aligned on a North Star and key success criteria that would drive measurable results.

North Star and Key Success Criteria

We defined our Engagement North Star as follows: ‘Create a more automated execution of the risk model, provide better execution stability, more transparency in pricing and lower the total cost of ownership, whilst ensuring zero friction in terms of multi-cloud interoperability.’ We then used this North Star to define the success criteria for the engagement.

Additional Considerations

Orchestration, Monitoring and Logging

Orchestration is critical to improving total cost of ownership (TCO) because it reduces the manual effort needed to run the end-to-end model. Monitoring at the job and instance level gives us transparency into failures and performance issues. With logging in place, we can reduce the cost of triage and improve the manageability of the platform.
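As an illustration of job-level monitoring and logging, the sketch below wraps a single job step, times it, and emits one structured JSON log line per run. The job and instance names are hypothetical, and this is not the team's monitoring code; in practice those labels would come from the orchestrator and from instance metadata, and the log lines would be shipped to a logging service.

```python
import json
import logging
import time
from typing import Any, Callable

logger = logging.getLogger("risk_model")


def run_monitored(job_name: str, instance_id: str, fn: Callable[[], Any]) -> dict:
    """Run one job step and return a structured record of its outcome.

    job_name and instance_id are hypothetical labels used for illustration.
    """
    start = time.monotonic()
    record = {"job": job_name, "instance": instance_id}
    try:
        fn()
        record["status"] = "SUCCESS"
    except Exception as exc:  # capture the failure so it is logged, not lost
        record["status"] = "FAILED"
        record["error"] = repr(exc)
    record["duration_s"] = round(time.monotonic() - start, 3)
    # One JSON object per line is easy to search and aggregate during triage.
    logger.info(json.dumps(record))
    return record
```

Returning the record instead of re-raising leaves the retry-or-fail decision to the caller, which in an orchestrated setup is usually the scheduler.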

Code Standardization

With cloud-agnostic code, we can write once and release anywhere, which makes a multi-cloud environment more sustainable and gives the business better long-term options.
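One common way to keep code cloud-agnostic is to make the business logic depend on a small storage interface rather than on a specific vendor SDK. The sketch below assumes a hypothetical `ObjectStore` protocol and uses an in-memory stand-in. Real backends would wrap the S3 and GCS client libraries, and this sketch is not the engagement's actual code.

```python
from typing import Dict, Protocol, Tuple
from urllib.parse import urlparse


class ObjectStore(Protocol):
    """Minimal storage interface the model code depends on (hypothetical)."""

    def read(self, bucket: str, key: str) -> bytes: ...

    def write(self, bucket: str, key: str, data: bytes) -> None: ...


class InMemoryStore:
    """Stand-in backend; real ones would wrap the S3 or GCS client libraries."""

    def __init__(self) -> None:
        self._objects: Dict[Tuple[str, str], bytes] = {}

    def read(self, bucket: str, key: str) -> bytes:
        return self._objects[(bucket, key)]

    def write(self, bucket: str, key: str, data: bytes) -> None:
        self._objects[(bucket, key)] = data


def resolve(uri: str, backends: Dict[str, ObjectStore]) -> Tuple[ObjectStore, str, str]:
    """Map 's3://bucket/key' or 'gs://bucket/key' to (backend, bucket, key)."""
    parsed = urlparse(uri)
    return backends[parsed.scheme], parsed.netloc, parsed.path.lstrip("/")


def copy_object(src: str, dst: str, backends: Dict[str, ObjectStore]) -> None:
    """Business logic written once: it never names a specific cloud."""
    src_store, src_bucket, src_key = resolve(src, backends)
    dst_store, dst_bucket, dst_key = resolve(dst, backends)
    dst_store.write(dst_bucket, dst_key, src_store.read(src_bucket, src_key))
```

Swapping clouds then means registering a different backend under the URI scheme, with no change to `copy_object`.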

Recovery

Being able to automatically resume a risk model run from the point of failure is critical: it avoids re-running work that has already completed, which reduces costs and improves end-to-end performance.
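A minimal sketch of resume-from-failure, assuming the run is split into named steps and that a small JSON checkpoint file records which steps have finished. The step names and checkpoint location are hypothetical. A production version would keep the checkpoint in durable storage such as a GCS object rather than on local disk.

```python
import json
import os
import tempfile
from typing import Callable, List, Tuple


def run_with_checkpoints(
    steps: List[Tuple[str, Callable[[], None]]], checkpoint_path: str
) -> List[str]:
    """Run steps in order, skipping any already recorded as complete.

    The checkpoint is a JSON list of finished step names. If a step raises,
    earlier completions stay recorded, so a rerun resumes at the failed step.
    Returns the names of the steps executed in this invocation.
    """
    done: List[str] = []
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            done = json.load(f)

    executed: List[str] = []
    for name, fn in steps:
        if name in done:
            continue  # completed in an earlier run; do not pay for it again
        fn()  # an exception here leaves the checkpoint at the last good step
        executed.append(name)
        done.append(name)
        with open(checkpoint_path, "w") as f:
            json.dump(done, f)
    return executed


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "risk_run.json")
    attempts = {"price_portfolios": 0}

    def flaky_pricing() -> None:
        # Simulated instance loss on the first attempt only.
        attempts["price_portfolios"] += 1
        if attempts["price_portfolios"] == 1:
            raise RuntimeError("instance lost")

    steps = [("ingest", lambda: None), ("price_portfolios", flaky_pricing),
             ("egress", lambda: None)]
    try:
        run_with_checkpoints(steps, path)
    except RuntimeError:
        pass
    print(run_with_checkpoints(steps, path))  # ['price_portfolios', 'egress']
```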

Dynamic Resource Allocation

Finally, our infrastructure design must be intelligent enough to scale up and down with our resource needs.
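Dataproc supports autoscaling policies, and Spark has its own dynamic allocation. Both rest on a sizing rule of roughly the following shape. The sketch below is a hypothetical rule, not the configuration used in the engagement: it sizes the cluster to drain the pending work in one wave, clamped to a floor and a ceiling. The parameter names and default limits are assumptions.

```python
import math


def target_workers(
    pending_tasks: int,
    tasks_per_worker: int,
    min_workers: int = 2,
    max_workers: int = 50,
) -> int:
    """Hypothetical sizing rule: enough workers to drain the queue in one wave,
    clamped to a floor (keep the cluster usable) and a ceiling (cap spend)."""
    if tasks_per_worker <= 0:
        raise ValueError("tasks_per_worker must be positive")
    needed = math.ceil(pending_tasks / tasks_per_worker)
    return max(min_workers, min(max_workers, needed))
```

For example, 100 pending tasks at 8 tasks per worker gives 13 workers, an idle queue falls back to the floor of 2, and a very large backlog is capped at 50.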

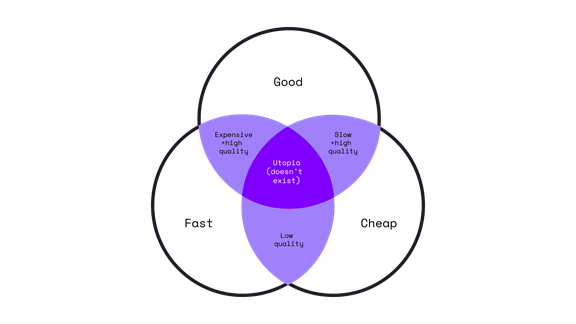

The Iron Triangle

When assessing the options that best serve your needs, you will almost always find that you are constrained by the iron triangle of good, fast and cheap.

We find that the best approach is as follows:

- Choose a problem statement to solve for.

- For that problem statement, define the top two priorities from the iron triangle (good, fast, cheap).

- Move the lever so that your solution satisfies these two criteria; you will almost always have to compromise on the third.

- For this engagement, we focused intensely on good and fast to achieve maximum stability, and with this focus we still delivered a cost comparable to the current cloud solution.

Our Solution: Achieving our North Star & Success Criteria

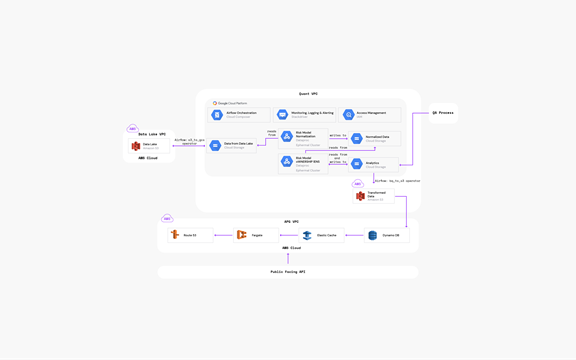

The Orchestration Layer

GCP Service: Cloud Composer

- Cloud Composer is a fully managed workflow orchestration service built on Apache Airflow. Its core function is to define the collection of tasks you want to run, organized in a way that reflects their relationships and dependencies.

Solution Specifics:

- For this engagement, we created three pipelines: one to transfer input data from S3 to GCS, one to run the Spark jobs, and one to transfer output data from GCS back to S3.
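The dependencies between these pipelines form a directed acyclic graph (DAG). In Airflow, the edges would be declared between tasks with the `>>` operator. The sketch below models the same idea with the standard library's `graphlib` (Python 3.9+) so the ordering can be inspected directly. The task names are hypothetical, and splitting cluster creation and scale-down into separate tasks is an assumption based on the Dataproc steps described later.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical task names; each key maps to the set of tasks it depends on.
pipeline: Dict[str, Set[str]] = {
    "ingest_s3_to_gcs": set(),
    "create_ephemeral_cluster": set(),
    "run_risk_model": {"ingest_s3_to_gcs", "create_ephemeral_cluster"},
    "scale_down_cluster": {"run_risk_model"},
    "egress_gcs_to_s3": {"run_risk_model"},
}


def parallel_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; every task in a wave can run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies satisfied
        waves.append(ready)
        sorter.done(*ready)  # mark the wave complete to unlock dependants
    return waves
```

For this graph the waves are: ingestion and cluster creation together, then the risk model, then egress and scale-down together. This is the kind of concurrency an orchestrator exploits automatically.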

Ingestion from AWS to GCP

GCP Service: Storage Transfer Service

- This is a service for managing large-scale data transfers easily, securely and efficiently. Storage Transfer Service and Transfer Service for on-premises data offer two high-performance pathways to Cloud Storage, both with the scalability and speed needed to simplify the data transfer process.

Solution Specifics:

- In the existing system, all input data is generated by various ETL tools that write it to Amazon S3 buckets.

- In our multi-cloud solution, we used Airflow to orchestrate the entire data transfer from S3 to GCS through the GCP Storage Transfer Service.
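An Airflow task that drives the Storage Transfer Service ultimately submits a transfer job description. The sketch below builds such a request body for an S3-to-GCS copy. The field names follow our reading of the REST `TransferJob` resource and should be checked against the current API reference. The bucket names and the prefix filter are hypothetical, and AWS credentials (an access key or role ARN) are deliberately left out. We understand that the Airflow Google provider's `CloudDataTransferServiceCreateJobOperator` accepts a body of this shape, but treat that as an assumption.

```python
from typing import Any, Dict


def s3_to_gcs_transfer_job(
    project_id: str, s3_bucket: str, gcs_bucket: str, prefix: str = ""
) -> Dict[str, Any]:
    """Build a TransferJob request body for an S3 -> GCS copy (sketch).

    AWS credentials are intentionally omitted; they would be supplied
    separately (e.g. via a role ARN) in a real deployment.
    """
    spec: Dict[str, Any] = {
        "awsS3DataSource": {"bucketName": s3_bucket},
        "gcsDataSink": {"bucketName": gcs_bucket},
    }
    if prefix:
        # Copy only objects under this prefix, e.g. a single run date.
        spec["objectConditions"] = {"includePrefixes": [prefix]}
    return {
        "description": f"risk-model ingest {s3_bucket} -> {gcs_bucket}",
        "projectId": project_id,
        "transferSpec": spec,
        "status": "ENABLED",
    }
```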

Risk Model Calculation

GCP Service: Dataproc

- Dataproc is a fully managed service for Apache Spark, Apache Hadoop and other open source software (OSS) that offers automated cluster management, containerized OSS jobs and enterprise security.

- Dataproc makes open source data and analytics processing fast, easy and more secure in the cloud.

Solution Specifics:

- Our solution automatically creates an ephemeral Dataproc cluster, with the cluster configuration passed in through Airflow variables.

- Risk portfolio jobs are then submitted using custom operators that reference Python files stored in GCS.

- Once the job has completed successfully, Airflow automatically scales down the cluster to keep costs low and make optimal use of resources.
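The sketch below shows how a JSON Airflow variable might be turned into a Dataproc cluster configuration, and how a PySpark job that points at a Python file in GCS might be described. It is not the team's custom operators. The key names follow our understanding of the Dataproc `ClusterConfig` and `Job` messages as used by the Python client, and should be verified against the API reference. The defaults, machine type and paths are hypothetical. In Airflow, the raw string would typically come from `Variable.get(...)`, and dictionaries of this shape can be passed to the Google provider's Dataproc create-cluster and submit-job operators.

```python
import json
from typing import Any, Dict, List

# Hypothetical defaults; real values would come from the team's variables.
DEFAULT_CLUSTER: Dict[str, Dict[str, Any]] = {
    "master_config": {"num_instances": 1, "machine_type_uri": "n1-standard-4"},
    "worker_config": {"num_instances": 2, "machine_type_uri": "n1-standard-4"},
}


def cluster_config_from_variable(raw_json: str) -> Dict[str, Any]:
    """Merge a JSON variable over the defaults, one section at a time."""
    overrides = json.loads(raw_json) if raw_json else {}
    config: Dict[str, Any] = {}
    for section, defaults in DEFAULT_CLUSTER.items():
        config[section] = {**defaults, **overrides.get(section, {})}
    # Keep any extra sections the variable adds (e.g. software settings).
    for section, value in overrides.items():
        config.setdefault(section, value)
    return config


def pyspark_job(project_id: str, cluster_name: str, main_uri: str,
                args: List[str]) -> Dict[str, Any]:
    """Job description that runs a Python file stored in GCS on the cluster."""
    if not main_uri.startswith("gs://"):
        raise ValueError("main_python_file_uri should be a gs:// path")
    return {
        "reference": {"project_id": project_id},
        "placement": {"cluster_name": cluster_name},
        "pyspark_job": {"main_python_file_uri": main_uri, "args": list(args)},
    }
```

Keeping the cluster shape in a variable means it can be resized between runs without redeploying the DAG code.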

Egress from GCP to AWS

GCP Service: Storage Transfer Service

Solution Specifics:

- As with data ingress, we used Airflow to orchestrate the egress of data from GCS back to S3.

- To speed up the transfer, the Airflow orchestration submits transfers for multiple data objects in the bucket in parallel rather than one at a time.
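In Airflow, this parallelism would typically come from several independent transfer tasks that the scheduler runs concurrently. The sketch below shows the same fan-out pattern with a thread pool. `transfer_one` is a hypothetical placeholder for whatever moves a single object, and the worker count is an assumption.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, List


def transfer_in_parallel(
    object_keys: List[str],
    transfer_one: Callable[[str], None],
    max_workers: int = 8,
) -> Dict[str, str]:
    """Submit one transfer per object and collect per-object outcomes.

    transfer_one is a hypothetical callable that moves a single object.
    """
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(transfer_one, key): key for key in object_keys}
        for future in as_completed(futures):
            key = futures[future]
            try:
                future.result()
                results[key] = "OK"
            except Exception as exc:
                # One failed object does not cancel the others; it is reported.
                results[key] = f"FAILED: {exc}"
    return results
```

Reporting failures per object, instead of aborting the batch, means only the failed objects need to be retried.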

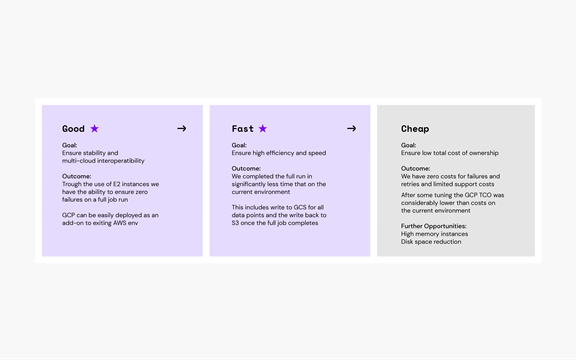

Our Results

Overview

- Long-running jobs are not a good fit for spot instances or an ephemeral architecture.

- Instance loss, and the job failures it causes, creates a cascading effect of issues that can raise the total cost of ownership.

- Lack of transparency in terms of cost and time makes optimization more challenging.
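A standard simplified model shows why long-running jobs suffer on preemptible capacity. The model is not derived from the engagement's data. Assume preemptions arrive as a Poisson process at rate λ per hour, and that a preempted job restarts from the beginning with zero restart overhead. Under these assumptions, the expected wall-clock time for T hours of work is (e^(λT) − 1)/λ, which grows exponentially with T. Checkpointing into k equal segments changes this to k(e^(λT/k) − 1)/λ.

```python
import math


def expected_runtime(work_hours: float, preemptions_per_hour: float,
                     segments: int = 1) -> float:
    """Expected wall-clock hours to finish `work_hours` of compute.

    Assumes Poisson preemptions and that a preempted segment restarts from
    its own beginning; segments > 1 models checkpointing between equal
    segments. Restart and checkpoint overheads are ignored.
    """
    if segments < 1:
        raise ValueError("segments must be at least 1")
    if preemptions_per_hour == 0:
        return work_hours
    lam = preemptions_per_hour
    seg = work_hours / segments
    return segments * math.expm1(lam * seg) / lam
```

At an assumed 0.1 preemptions per hour, a 10-hour job that restarts from scratch is expected to take about 17.2 hours, against about 10.5 hours when split into 10 checkpointed segments. A 2-hour job takes about 2.2 hours even without checkpoints.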

Core Findings

Key Takeaways

- Our solution, built on GCP and fully interoperable with AWS, delivers better performance, more stability and a cost comparable to the current solution.

- Further performance improvements and cost reductions are possible with additional changes to the cluster configuration and by using GCP BigQuery to run the risk models.

In Summary

What We Learned

- Aligning on a North Star and establishing clear, measurable criteria are key to any project. Cloud modernization is no different.

- Using each cloud provider for its strengths is critical to building a strong foundation for a multi-cloud deployment.

- Public cloud interoperability is no longer as large a challenge as it once was, and organizations should take advantage of best-in-breed services across public cloud offerings to gain scale and cost optimization.

- While transforming cloud workloads (redesigning them for a specific cloud) can deliver a higher ROI (3-5x returns), a multi-cloud approach that reshapes the workload offers quicker wins, giving near-term relief from existing challenges.

Contributing Authors