At Kin + Carta, we have been experimenting with and building a multitude of Skills, both to increase our own knowledge and to validate our thinking about how Alexa and the Echo could help our clients better serve their customers. You can see a sneak preview of one such experiment, prototyping Skills for personal banking.

Rolling up our sleeves and building Skills has been critical: after all, there are some things Alexa can’t tell us herself - things that our strategists, designers, and engineers needed to discover.

These include the fact that some services and interactions fit this new channel perfectly, while others are simply not suited to an invisible interface at all. Along the way, we have also learned a great deal about what works for Alexa, what does not, and how to build a robust, great Skill.

In an upcoming post, I am going to share the ten things that Alexa didn’t tell us but that we have uncovered in the course of our work. Before sharing those lessons, though, let’s introduce ourselves to Alexa properly. What is Alexa? And how does Alexa work?

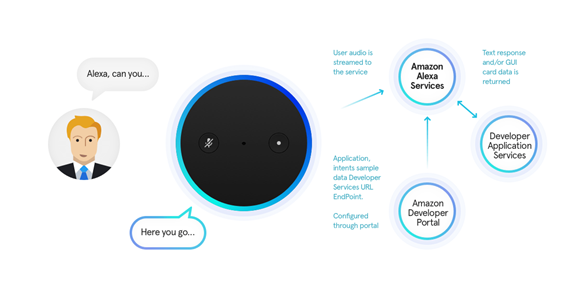

The Amazon Echo is a hands-free, in-home speaker. The Echo connects to the cloud-hosted Alexa Voice Service (AVS), which allows you to complete a range of smart tasks simply by beginning a question or command with the Wake Word, ‘Alexa’. Think of Alexa as a voice-enabled personal assistant, and the Amazon Echo as one of her physical ‘homes’. Alexa also lives inside two smaller speakers, the Amazon Tap (unavailable in the UK at the time of writing) and the Echo Dot, as well as a growing range of devices from other manufacturers.

Alexa is the core of the service, and Amazon has been keen to make it accessible to as wide a range of use cases as possible by offering software libraries that can be embedded within any suitable custom hardware, from an iPhone to a Raspberry Pi.